Shell Shocked: Wire an LLM Directly into Your Linux Terminal

- The Philosophy: The Terminal Revolution You Didn't See Coming

- What Is arkterm?

- Why Your Terminal Needs AI (And Why Now)

- Getting Started: From Zero to AI Terminal in 60 Seconds

- Core Features That Make arkterm Shine

- Advanced Use Cases

- The Technical Architecture

- Why Groq? The Speed Advantage

- Configuration and Customization

- Best Practices and Pro Tips

- The Future of AI-Native Terminals

- Getting Involved

- Conclusion: Your Terminal, Supercharged

Shell Shocked: Wire an LLM Directly into Your Linux Terminal

The Philosophy: The Terminal Revolution You Didn’t See Coming

Inspired by a Wrap.dev engineer’s description of their interface as a way to “tell your computer what to do” rather than just chat, I explored Wrap.dev and developed my own minimal viable product for development. This project draws inspiration from Wrap.dev, aiming to create a comparable tool, though less sophisticated.

Picture this: You’re staring at a blank terminal, trying to remember the exact find command syntax to locate all Python files modified in the last week. Instead of fumbling through man pages or Stack Overflow, you type:

> find all python files changed in the last 7 daysAnd your terminal responds with the exact command you need, explains what it does, and offers to run it for you. Welcome to arkterm – where your Linux terminal becomes an AI-native workspace.

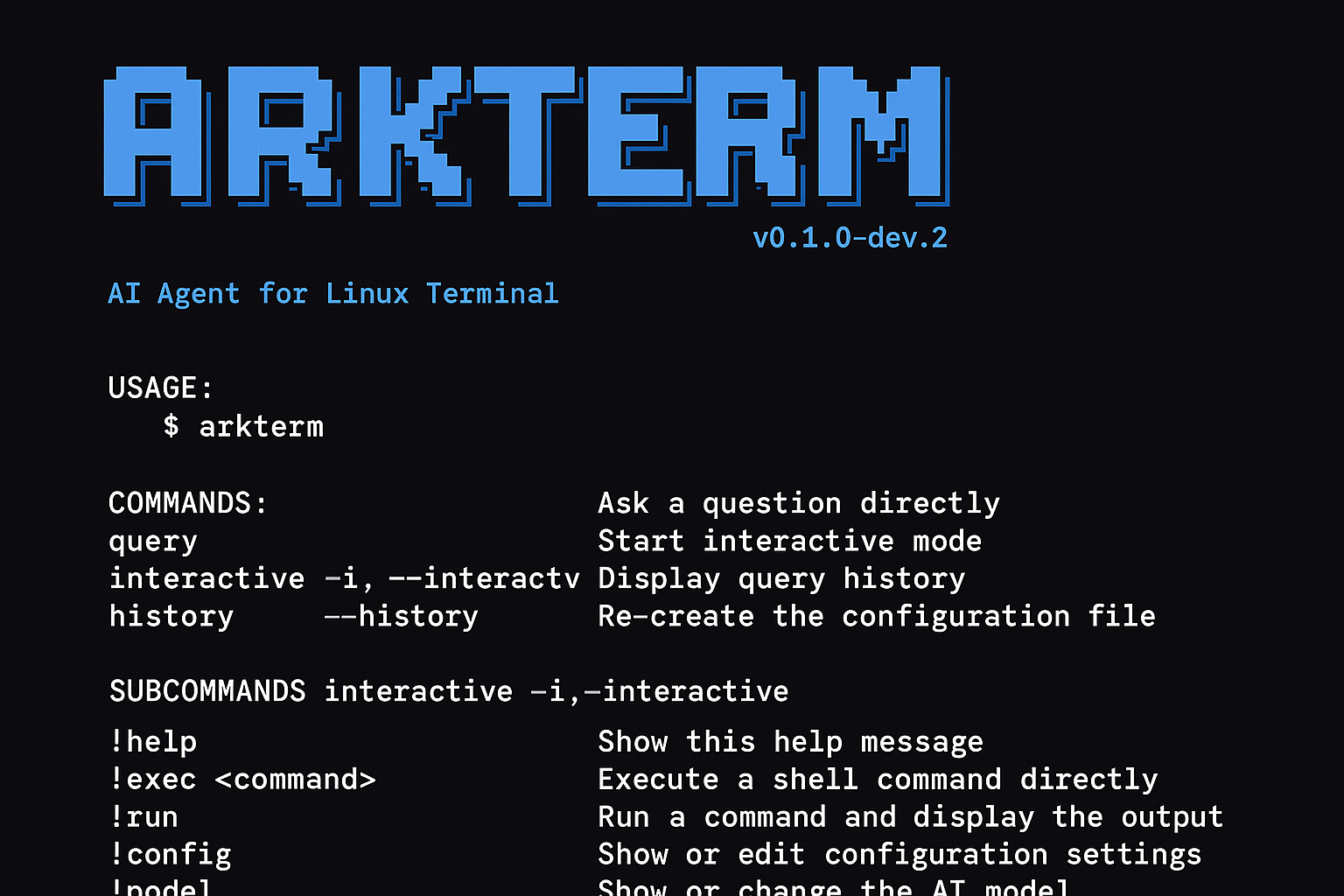

What Is arkterm?

arkterm is an open-source tool that transforms your terminal into an intelligent assistant by integrating large language models directly into your shell environment. Unlike traditional chatbots that live in browsers, arkterm brings AI into the very heart of your development workflow – the command line.

Built with Python and powered by Groq’s lightning-fast LLM API, arkterm bridges the gap between natural language and shell commands, making your terminal understand context, intent, and even your current project structure.

Why Your Terminal Needs AI (And Why Now)

The Problem with Traditional Command Lines

Modern developers juggle hundreds of commands across multiple tools, frameworks, and systems. The cognitive overhead of remembering syntax, flags, and edge cases slows down even experienced engineers. Traditional solutions like aliases and scripts only scratch the surface.

The AI Advantage

arkterm leverages the power of models like Llama 3.1 and Mixtral to:

- Understand Context: Knows your current directory, project type, and system environment

- Translate Intent: Converts natural language queries into precise shell commands

- Explain Actions: Shows you what commands do before executing them

- Learn Patterns: Adapts to your workflow and preferences

Getting Started: From Zero to AI Terminal in 60 Seconds

Prerequisites

- Python 3.10 or higher

- A free Groq API key (get one here)

- uv package manager (optional but recommended)

Installation

# Clone the repository

git clone https://github.com/saadmanrafat/arkterm.git

cd arkterm

# Install with uv (recommended)

uv pip install -e .

# Or with pip

pip install -e .Initial Setup

# Create configuration file

arkterm --setupThis creates a YAML configuration file at ~/.aiterm/config.yaml:

API:

api_key: "YOUR_GROQ_API_KEY_HERE"

model: "deepseek-r1-distill-llama-70b"

api_base: "https://api.groq.com/openai/v1"

SETTINGS:

allow_command_execution: false

max_tokens: 2048Add your Groq API key and you’re ready to go!

Core Features That Make arkterm Shine

1. Natural Language Command Generation

Instead of memorizing complex syntax, describe what you want:

> arkterm "compress all log files older than 30 days"Output:

I'll help you compress log files older than 30 days. Here's the command:

find /var/log -name "*.log" -type f -mtime +30 -exec gzip {} \;

This command:

- Searches in /var/log directory

- Finds files with .log extension

- Filters files older than 30 days (-mtime +30)

- Compresses each file with gzip

2. Interactive Mode for Continuous Assistance

Launch interactive mode for ongoing AI assistance:

arkterm -i> check disk usage for directories over 1GB

Here's how to check disk usage for large directories:

```bash

du -h --max-depth=1 | grep '[0-9]G'

This command shows directory sizes and filters for those over 1GB.

> !exec du -h --max-depth=1 | grep '[0-9]G'

Executing: du -h --max-depth=1 | grep '[0-9]G'

2.1G ./node_modules

1.4G ./datasets

3.2G ./models3. Context-Aware Project Detection

arkterm automatically detects your project type and provides relevant suggestions:

def _detect_project_type(cwd: str) -> str:

"""Detects project type based on files in current directory"""

files = os.listdir(cwd) if os.path.exists(cwd) else []

if "package.json" in files:

return "Node.js project detected"

elif "requirements.txt" in files or "pyproject.toml" in files:

return "Python project detected"

elif "Cargo.toml" in files:

return "Rust project detected"

elif "go.mod" in files:

return "Go project detected"

elif ".git" in files:

return "Git repository detected"

else:

return "General directory"This context gets passed to the AI, enabling project-specific suggestions.

4. Safety-First Command Execution

arkterm prioritizes safety with built-in protections:

# Safety guidelines built into the system prompt

SAFETY_RULES = """

- Never execute commands that could damage the system

- Always warn about destructive operations (rm -rf, format, etc.)

- Prefer safer alternatives (mv to trash vs rm, etc.)

- Ask before modifying system files or configurations

"""Advanced Use Cases

DevOps Automation

> arkterm "show me all docker containers using more than 500MB memory"Response:

docker stats --format "table {{.Container}}\t{{.Name}}\t{{.MemUsage}}" --no-stream | awk 'NR>1 && $3 ~ /[5-9][0-9][0-9]M|[0-9]+G/ {print}'Log Analysis

> arkterm "find all 404 errors in nginx logs from last hour"Response:

grep "404" /var/log/nginx/access.log | awk -v cutoff=$(date -d '1 hour ago' '+%d/%b/%Y:%H:%M:%S') '$4 > "["cutoff'System Monitoring

> arkterm "alert me if CPU usage goes above 80%"Response:

while true; do

cpu=$(top -bn1 | grep "Cpu(s)" | awk '{print $2}' | sed 's/%us,//')

if (( $(echo "$cpu > 80" | bc -l) )); then

notify-send "High CPU Usage" "CPU usage is ${cpu}%"

fi

sleep 60

doneThe Technical Architecture

Core Components

To begin, establish a repository using UV, a Python package manager designed to streamline dependency management and virtual environments. Next, navigate to Groq (not Grok), register for their service, and get a free API key from their developer portal. Groq offers production-ready models such as gemma2-9b-it, meta-llama/Llama-Guard-4-12B, llama-3.1-8b-instant and more at no cost, though API rate limits and context token window restrictions apply. A comprehensive list of their supported models is provided.

The initial approach considered LangChain, but its intricate nature led to its exclusion from this project. As a result, we will use the requests library to communicate with Groq’s LLMs through API calls. It is important to note that Groq is not currently supported by LangChain. However, as engineers, we are adept at finding solutions.

# Core workflow

def fetch_response(query: str, config: Dict[str, Any]) -> str:

"""Main AI interaction pipeline"""

# 1. Gather system context

system_info = fetch_system_info()

# 2. Create enhanced prompt with context

system_prompt = get_enhanced_system_prompt(system_info)

# 3. Call Groq API

payload = {

"model": config["API"]["model"],

"messages": [

{"role": "system", "content": system_prompt},

{"role": "user", "content": query}

],

"max_tokens": config["SETTINGS"]["max_tokens"],

"temperature": 0.7

}

# 4. Parse and return response

response = requests.post(api_endpoint, json=payload)

return response.json()["choices"][0]["message"]["content"]Command Block Parsing

arkterm intelligently extracts executable commands from AI responses:

def parse_command_blocks(response: str) -> list[str]:

"""Extract command blocks from markdown-formatted AI responses"""

command_blocks = []

current_block = []

in_code_block = False

for line in response.splitlines():

stripped = line.strip()

if stripped.startswith("```"):

in_code_block = not in_code_block

if not in_code_block and current_block:

command_blocks.append("\n".join(current_block))

current_block.clear()

elif in_code_block:

current_block.append(line)

return command_blocksWhy Groq? The Speed Advantage

arkterm uses Groq’s inference engine for several key reasons:

- Blazing Fast: Sub-second response times for real-time terminal interaction

- Cost Effective: Free tier with generous limits perfect for CLI usage

- Multiple Models: Access to Llama 3.1, Mixtral, and Gemma models

- High Quality: Production-ready models with excellent code generation capabilities

Configuration and Customization

Model Selection

Switch between different models based on your needs:

# In interactive mode

> !model

Available Groq Models:

- llama3-8b-8192 (Fast, efficient)

- llama3-70b-8192 (More powerful)

- mixtral-8x7b-32768 (Mixtral model)

- gemma-7b-it (Google's Gemma model)

- deepseek-r1-distill-llama-70b (Distilled Llama model)

Currently using: llama3-8b-8192

Change model? (Enter model name or press Enter to keep current):Safety Configuration

Control command execution permissions:

SETTINGS:

allow_command_execution: true # Enable command execution

max_tokens: 4096 # Increase for longer responsesBest Practices and Pro Tips

1. Be Specific with Context

# Instead of: "delete old files"

# Try: "delete log files older than 7 days in /var/log"2. Use Interactive Mode for Complex Tasks

For multi-step operations, interactive mode maintains context:

arkterm -i

> I need to set up a Python web scraper project

> create a virtual environment for it

> install requests and beautifulsoup4

> create a basic scraper template3. Leverage Project Detection

arkterm automatically understands your project context:

# In a Node.js project

> arkterm "add a new dependency for testing"

# Suggests: npm install --save-dev jest

# In a Python project

> arkterm "add a new dependency for testing"

# Suggests: pip install pytestThe Future of AI-Native Terminals

arkterm represents just the beginning of AI-native command-line interfaces. Future developments might include:

- Local Model Support: Running models like Code Llama locally for offline operation

- Shell Integration: Direct shell hooks for seamless AI assistance

- Team Knowledge Bases: Shared command libraries and best practices

- Automated Workflows: AI-generated scripts for complex multi-step operations

Getting Involved

arkterm is open-source and welcomes contributions:

- GitHub: saadmanrafat/arkterm

- Issues: Report bugs or request features

- Pull Requests: Contribute code improvements

- Discussions: Share use cases and ideas

Conclusion: Your Terminal, Supercharged

arkterm transforms the humble terminal from a command interpreter into an intelligent assistant that understands your intent, explains its actions, and helps you work more efficiently. By bringing large language models directly into the command line, we’re not just changing how we interact with our computers – we’re fundamentally rethinking what a terminal can be.

Whether you’re a DevOps engineer managing complex infrastructure, a developer navigating unfamiliar codebases, or a power user who lives in the terminal, arkterm offers a glimpse into the future of human-computer interaction.

The age of typing cryptic commands from memory is ending. The era of conversational computing has begun.